The task_ctx fixture evaluates the Airflow task context and makes it available to the user. Using this fixture, the user has access to all the items that would be available to kwargs when setting provide_context to True when using the PythonOperator in Airflow.

All in all, collection-time fixture execution should be used for test parametrization, for generating expensive resources that can be made available to tests as copies, and for generating fixture factories. On the other hand, deferred fixtures are great for database connections and other resources that need to be recycled at each test execution.

Finally, the sink task report can be used for reporting purposes and for communicating test results to other DAGs using the xcom channel. The user can supply their own dag_report fixture to customize the reporting. The plugin expects the following fixture signature, scoped at the session level:

    @pytest.fixture(scope="session")
    def dag_report(**kwargs):
        ...

The user can configure the DAG using two reserved fixtures. These fixtures must be scoped at the session level and their location should cover all the collected test items.
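The fixture-factory idea can be illustrated with a minimal sketch. It uses only the standard library; the names make_connection_factory and check_select_one are hypothetical and are not part of pytest-airflow. The point is that creating the factory is cheap and safe at collection time, while the connection itself is only opened and closed when the test body runs.

```python
import sqlite3
from contextlib import contextmanager


def make_connection_factory(database=":memory:"):
    """Return a factory; nothing is opened until the factory is called."""

    @contextmanager
    def connect():
        conn = sqlite3.connect(database)  # setup happens at call time
        try:
            yield conn
        finally:
            conn.close()  # teardown also happens at call time

    return connect


def check_select_one(connection_factory):
    """Stand-in for a test body that receives the factory as a fixture value."""
    with connection_factory() as conn:
        return conn.execute("SELECT 1").fetchone()[0]


result = check_select_one(make_connection_factory())
```

In a real test suite the factory would presumably be returned from an ordinary pytest fixture, so that the test receives it by name and opens the connection itself during the DAG run.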

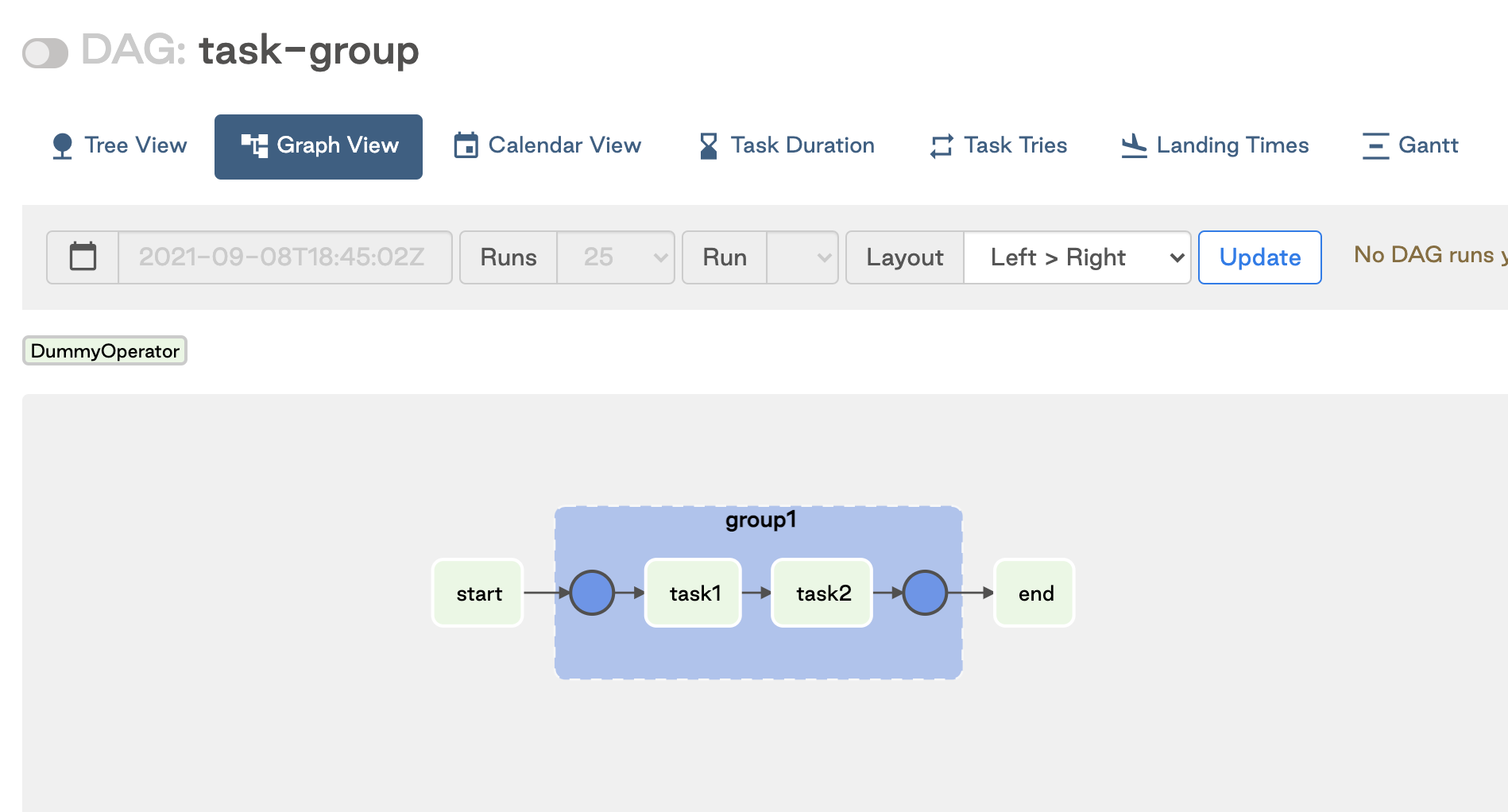

By the nature of Airflow, pytest cannot collect tests on the fly based on the results of source. Instead, the set of all possibly desired tests is generated before source is evaluated. The user can use all of the available pytest flags (e.g. -m, -k, paths) to narrow down this initial set of tests.

The plugin makes a source task available, called _pytest_source by default. This task allows skipping unwanted tests for a particular DAG run using the following configuration keys:

- marks: a list of marks; it filters tests in the same way as the -m flag operates when collecting tests with pytest.
- keywords: a list of keywords; it filters tests in the same way as the -k flag operates when collecting tests with pytest.

The plugin defers test execution to the DAG run. When pytest is run, the tests are collected and the associated callables are generated and passed to the PythonOperator. If this completes without any errors, pytest returns the DAG and exits. That means that it will report that all tests passed, which only means that the DAG was compiled without any problems.

Fixture setup and teardown are executed at the moment of DAG compilation. That means that fixtures such as database connections will not be available at the moment of test execution during a DAG run. There are two ways around this problem. The first is to implement the fixture as a factory and handle its setup and teardown inside the test itself. Alternatively, the plugin allows deferred fixture setup and teardown. To achieve deferred execution, the name of the fixture must be prefixed with defer_ or the fixture must depend on the reserved fixture task_ctx. The plugin then defers the execution of such fixtures until the DAG is run. Fixtures that depend on a deferred fixture also have their execution deferred. The reserved fixture task_ctx is always deferred.
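The deferral rule (a defer_ prefix, a dependency on task_ctx, or a dependency on any other deferred fixture) can be sketched as a small resolver. This is an illustration of the rule as described here, not the plugin's actual implementation; the function deferred_fixtures and the fixture names in the example are hypothetical.

```python
def deferred_fixtures(dependencies):
    """Return the names of fixtures whose execution would be deferred.

    `dependencies` maps each fixture name to the fixtures it requests.
    """

    def is_deferred(name, seen=()):
        # Rule 1: the reserved fixture and any defer_-prefixed fixture.
        if name == "task_ctx" or name.startswith("defer_"):
            return True
        if name in seen:  # guard against accidental cycles
            return False
        # Rule 2: deferral propagates to anything that depends on a deferred fixture.
        return any(
            is_deferred(dep, seen + (name,))
            for dep in dependencies.get(name, ())
        )

    return {name for name in dependencies if is_deferred(name)}


# Hypothetical fixture graph, for illustration only.
graph = {
    "task_ctx": [],
    "defer_db": [],
    "run_date": ["task_ctx"],      # depends on the reserved fixture
    "users_table": ["defer_db"],   # depends on a deferred fixture
    "sample_rows": [],             # plain collection-time fixture
    "report_path": ["sample_rows"],
}
deferred = deferred_fixtures(graph)
```

Under these assumptions, sample_rows and report_path would be set up when the DAG is compiled, while the other four would wait until the DAG run.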
Note: pytest-airflow depends on Apache Airflow, which requires export SLUGIFY_USES_TEXT_UNIDECODE=yes to be specified before install.

When running pytest from the command line, the plugin collects the tests and also outputs a DAG tree view. When invoking pytest from Python code, pytest.main() returns the DAG along with its source and sink tasks:

    import pytest
    dag, source, sink = pytest.main(...)

The plugin generates two tasks at the start and end of the workflow, which represent the source and sink for the tests. The source task is responsible for branching and the sink task for reporting. They are called _pytest_source and _pytest_sink by default. To change these default names, use the source and sink flags:

$ pytest --airflow --source branch --sink report

If the plugin is installed, pytest will automatically use it. Placing the Python script above in one's DAG folder is enough to trigger the DAG. Note that pytest will be evaluated from the path where Airflow is run.

The plugin creates a DAG of the form source -> tests -> sink. Source marks the tests that will be executed and those that will be skipped, tests executes the selected tests as separate tasks, and sink reports the test results. Airflow requires that any DAG be completely defined before it is run.
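To make the role of the source task more concrete, here is a rough sketch of how a fixed, pre-collected set of tests might be split into executed and skipped tests for one DAG run, using the marks and keywords configuration keys. The matching logic is a simplifying assumption (any listed mark matches, any listed keyword is a substring of the test name); pytest's real -m and -k flags accept richer expressions, and the plugin's own behaviour may differ. The function select_tests and the test names are hypothetical.

```python
def select_tests(collected, conf):
    """Split pre-collected tests into (run, skip) lists for one DAG run.

    Assumption: a test is kept when it carries any requested mark and its
    name contains any requested keyword. A missing or empty key filters nothing.
    """
    marks = set(conf.get("marks") or [])
    keywords = list(conf.get("keywords") or [])
    run, skip = [], []
    for name, test_marks in collected.items():
        mark_ok = not marks or bool(marks & set(test_marks))
        keyword_ok = not keywords or any(k in name for k in keywords)
        (run if mark_ok and keyword_ok else skip).append(name)
    return run, skip


# The full set of tests is fixed when the DAG is built; only the split changes per run.
collected = {
    "test_load_users": {"db"},
    "test_load_orders": {"db", "slow"},
    "test_render_report": set(),
}
run, skip = select_tests(collected, {"marks": ["db"], "keywords": ["users"]})
```

The design point is that the DAG always contains a task for every collected test; the source task only decides, per run, which of those tasks go ahead and which are skipped.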


The generated test callables are eventually passed to PythonOperators that are run as separate Airflow tasks.

Pytest-airflow can be installed with pip:

$ pip install pytest-airflow

Pytest-airflow is a plugin for pytest that allows tests to be run as tasks in an Airflow DAG. Pytest handles test discovery and function encapsulation, so tests are declared in the usual way, with the usual pytest features such as parametrization, fixtures and marks.
